The tech industry in 2025 has so far focused primarily on AI and AI transformation. We are all aware of how crucial digital transformation was to business a decade ago. It paved the way for businesses to go digital and reach customers as quickly as possible. AI transformation begins where digital transformation nears its end.

My immediate response to anyone who discusses AI is to first assess their level of digital transformation. Until digital transformation is nearing completion, it is not helpful to even think about AI. Businesses that want to move towards AI are really looking for an AI transformation: a journey, not a one-time shift.

I want to clarify what I mean by the two terms that I increasingly use:

1) Digital transformation: A cultural change that an enterprise brings to its employees and consumers, enabling them to interact increasingly through digital channels. This reduces wait times, increases customer satisfaction, organizes data in a more meaningful way, and makes it possible to reach customers as quickly as possible when needed.

2) AI transformation: Again a cultural change, this time one that lets employees and consumers access the data available within the enterprise in the most effective way, with near-zero human help. It does so by making the interaction between humans and the machines that infer and return the response feel more like natural language.

So, who can think about AI? I look at this through the lens of the enterprise’s consumers and producers:

Enterprise customers (Mostly consumers)

1) Enterprises with large volumes of data that have been digitally transformed in line with the vision they set a decade ago

2) Enterprises whose customers increasingly choose to interact with the business through digital channels

3) Enterprises that offer an omnichannel interface for customers to interact with the business. This may sound naive, but an omnichannel interface lets customers still reach the business through another channel when the channel that relies heavily on AI is not responding. Remember, taking your business to AI is a transformation and not an overnight deployment.

Enterprise employees (Most often producers)

1) Enterprises that have migrated to digital and cloud-based productivity tools for their employees

2) Enterprises that constantly offer ways for employees to learn new technologies, explore them, and integrate what they learn into their roles

Big enterprises that have been successful in their AI journey so far are those that have fully embraced digital transformation. Some examples that come to mind include Meta and Google. They introduced some of the most powerful models by building on the data they already had. Not every enterprise needs to worry about building models; most can use these models as a base to start their AI transformation. However, these models are just like the dough of a pizza; we still need the right ingredients in the correct order to serve our specific customers.

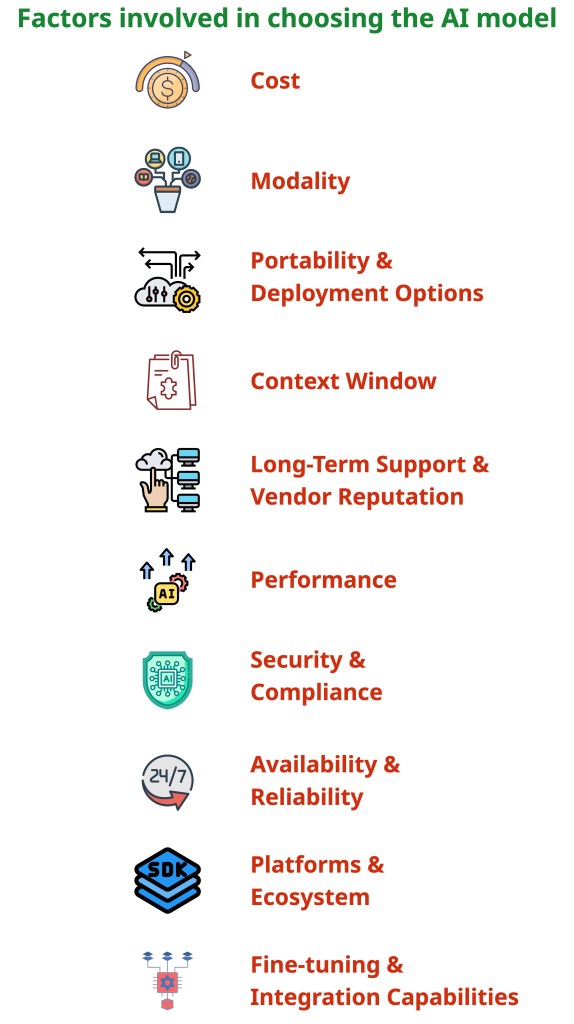

Therefore, selecting the right base or foundation model is crucial in establishing the right AI journey and transformation. What are the factors that we need to consider while choosing the base model?

1) Cost: The costs involved in choosing the AI model are multi-dimensional.

- Model Modality & Size: Costs can vary significantly based on the complexity of the modality (text, image, video, or multimodal) and the size of the model (i.e., the number of parameters). Larger, more complex models typically incur higher operational costs.

- Context Window: Models with larger context windows might have different pricing tiers due to higher processing requirements.

- Infrastructure: The cost isn’t just the model itself but also the infrastructure needed to host and run it efficiently (e.g., GPUs, TPUs). This includes development, testing, and production environments.

- Pricing Models:

  - Pay-as-you-go: Common for API-based access to foundational models, often priced per token, per image, or per API call.

  - Provisioned Throughput: Some cloud vendors offer dedicated capacity for fine-tuned models or when consistent high performance is required, which can involve a fixed cost for a certain level of performance.

  - Open Source & Self-Hosting: While the model might be free, you bear the whole infrastructure, maintenance, and operational costs.

- Fine-tuning & Training: If you plan to fine-tune a model, factor in the costs associated with data preparation, training runs, and experimentation.
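To make the pay-as-you-go versus fixed-cost trade-off concrete, here is a minimal sketch in Python. The prices, token counts, and the fixed monthly cost are purely illustrative assumptions, not any provider's actual rates, and `TokenPricing`, `monthly_pay_as_you_go`, and `break_even_requests` are hypothetical helper names.

```python
from dataclasses import dataclass


@dataclass
class TokenPricing:
    """Hypothetical pay-as-you-go prices, in USD per 1,000 tokens."""
    input_per_1k: float
    output_per_1k: float


def monthly_pay_as_you_go(pricing, requests_per_month, input_tokens, output_tokens):
    """Estimate the monthly API bill for a steady workload."""
    per_request = (
        input_tokens / 1000 * pricing.input_per_1k
        + output_tokens / 1000 * pricing.output_per_1k
    )
    return per_request * requests_per_month


def break_even_requests(pricing, input_tokens, output_tokens, fixed_monthly_cost):
    """Requests per month above which a fixed-cost option becomes cheaper."""
    per_request = monthly_pay_as_you_go(pricing, 1, input_tokens, output_tokens)
    return fixed_monthly_cost / per_request


# Illustrative numbers only; real prices vary by provider and model.
pricing = TokenPricing(input_per_1k=0.001, output_per_1k=0.002)
bill = monthly_pay_as_you_go(
    pricing, requests_per_month=100_000, input_tokens=2000, output_tokens=500
)
threshold = break_even_requests(pricing, 2000, 500, fixed_monthly_cost=1500.0)

print(f"Pay-as-you-go estimate: ${bill:,.2f} per month")
print(f"Fixed capacity pays off above {threshold:,.0f} requests per month")
```

The same comparison works for self-hosting if you treat infrastructure and operations as the fixed monthly cost, though it ignores one-off expenses such as fine-tuning runs.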

2) Modality: Modality refers to the different types of data the model can understand (input) and generate (output). The different types of modalities are –

- Text: It is used in a simple chat-type request-response interface.

- Image: Generating images, modifying images, and answering questions about an image.

- Audio: Live translation, speech recognition, natural language processing.

- Video: Action recognition, video summarization, object tracking.

- Multimodal: Models that can process and relate information from multiple modalities simultaneously (e.g., understanding an image and generating a text description or answering questions about a video).

3) Portability & Deployment Options: Portability refers to the ease with which a model can be deployed, run, and managed across different environments.

- Cloud-Specific vs Cloud-Agnostic: Some cloud providers offer a marketplace where you can choose models and deploy them with ease, at the price of tight integration with that provider. Other models are easily deployable across various clouds or on-premises.

- On-Premise Deployment: If data sovereignty or specific security requirements necessitate on-premise deployment, ensure the model supports this.

- Edge Deployment: Choose models that can be deployed in the cloud and accessed via API, and that also come in a lighter version that runs on devices such as mobile phones and IoT devices.

- Containerization & Orchestration: Support for technologies like Docker and Kubernetes can significantly simplify deployment and management.

4) Context Window: The context window is the amount of information (measured in tokens) that a model can accept as input and generate as output in a single interaction.

- Tokens: Tokens are pieces of words or sub-words that models use to process text. For example, ‘AI transformation’ might be broken down into tokens like ‘AI’, ‘transform’, ‘ation’.

- Impact: A larger context window allows the model to consider more information from the input (e.g., longer documents, more detailed prompts, extensive chat history) to generate more relevant, coherent, and contextually aware responses. However, larger context windows can also lead to increased processing time and cost.
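A common consequence of a fixed context window is that long chat histories have to be trimmed before each request. The sketch below shows one simple way to do that, keeping the newest messages that fit. It rests on loud assumptions: `estimate_tokens` is a crude stand-in that assumes about four characters per token, so a real tokenizer from your model provider will give different counts, and `fit_history` is a hypothetical helper, not part of any library.

```python
def estimate_tokens(text: str) -> int:
    """Crude stand-in for a tokenizer: assumes about 4 characters per token."""
    return max(1, len(text) // 4)


def fit_history(messages, context_window, reserved_for_output):
    """Keep the most recent messages that fit in the input budget.

    context_window and reserved_for_output are in tokens. The input budget
    is whatever remains after reserving room for the response.
    """
    budget = context_window - reserved_for_output
    kept = []
    used = 0
    # Walk backwards so the newest messages are considered first.
    for message in reversed(messages):
        cost = estimate_tokens(message)
        if used + cost > budget:
            break
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept, used


history = [
    "a" * 400,  # oldest message
    "b" * 200,
    "c" * 100,  # newest message
]
kept, used = fit_history(history, context_window=120, reserved_for_output=40)
print(f"Kept {len(kept)} of {len(history)} messages using about {used} tokens")
```

Dropping the oldest messages is only one policy; summarizing older turns is another option, at the cost of an extra model call.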

5) Long-Term Support & Vendor Reputation: AI models are not static; they evolve. Choose a model that is likely to be maintained and improved over time.

- Vendor/Community Backing: Opt for models backed by reputable organizations (e.g., established AI research labs, major tech companies) or strong open-source communities with a track record of updates, bug fixes, and enhancements.

- Roadmap & Vision: Look for the provider’s long-term vision for the model and its environment.

- Deprecation Policies: Understand how model versioning works and how the provider plans to support and retire versions.

6) Performance: Model performance is critical for user satisfaction and achieving business objectives.

- Accuracy & Relevance: How well the model performs its intended task (e.g., correctness of answers, quality of generated content, precision/recall for classification tasks). Evaluate this on datasets relevant to your specific use case.

- Latency: The time it takes for the model to generate a response after receiving a request. Low latency is crucial for real-time applications.

- Throughput: The number of requests the model can serve over a given period of time.

- Scalability: The model’s ability to handle a growing volume of inference requests without degradation in performance.

- Benchmarking: Run your own assessment of the candidate models and record the results as a benchmark for future reference.
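The latency and throughput measurements described above can be sketched with a small harness. This is a minimal example under stated assumptions: `fake_model` is a hypothetical stand-in for a real model call, requests are sent one at a time (so the throughput figure says nothing about concurrent load), and the 95th percentile uses the simple nearest-rank method.

```python
import math
import statistics
import time


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a real model call; replace with your client."""
    time.sleep(0.001)
    return prompt.upper()


def benchmark(call, prompts):
    """Send prompts sequentially and summarize latency and throughput."""
    latencies = []
    start = time.perf_counter()
    for prompt in prompts:
        t0 = time.perf_counter()
        call(prompt)
        latencies.append(time.perf_counter() - t0)
    elapsed = time.perf_counter() - start

    ordered = sorted(latencies)
    # Nearest-rank 95th percentile.
    p95_index = max(0, math.ceil(0.95 * len(ordered)) - 1)
    return {
        "requests": len(ordered),
        "median_s": statistics.median(ordered),
        "p95_s": ordered[p95_index],
        "throughput_rps": len(ordered) / elapsed,
    }


result = benchmark(fake_model, [f"question {i}" for i in range(20)])
for name, value in result.items():
    print(f"{name}: {value}")
```

Running the same prompt set against each candidate model, and saving the results, gives you a baseline to compare against when a provider ships a new version.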

7) Security & Compliance: This is important because there have been incidents of intellectual property and proprietary information being leaked.

- Data Security: Ensure the model provider has strong data handling policies, especially if you are sending data to a third-party API. For self-hosted or fine-tuned models, implement security for your data pipelines and infrastructure.

- Model Integrity & Robustness: The model should be resistant to adversarial attacks (inputs designed to fool the model) and data poisoning.

- Ethical Considerations & Guardrails: The model should support mechanisms to filter harmful, biased, or inappropriate content. Look for features like multi-level guardrails and the ability to customize safety settings.

- Extensibility and Modification (Open/Closed Principle Adaptation): The model should be “open for extension” (e.g., through techniques like Retrieval Augmented Generation (RAG) or integrating with other systems) but “closed for modification” in terms of its core vulnerabilities or unauthorized external tampering.

- Compliance: Ensure the model and its usage comply with regulations and standards such as CCPA, GDPR, HIPAA, etc.

8) Availability & Reliability: For production systems, especially those that are customer-facing, the model needs to be consistently available.

- Uptime SLAs: Check the uptime commitments in the provider’s service-level agreement (SLA) and choose infrastructure that can sustain high model uptime.

- Redundancy & Failover: Deploy across multiple regions and multiple availability zones for higher availability.
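A quick calculation shows why redundancy matters. The sketch below uses the standard formulas for combined availability: redundant replicas fail together only if all of them fail, while a chain of dependencies is up only if every link is up. It assumes failures are independent, which is optimistic in practice because regions can share failure causes, and the 99% figure is an illustrative input, not any provider's SLA.

```python
def parallel_availability(single: float, replicas: int) -> float:
    """Availability of redundant replicas, assuming independent failures."""
    return 1 - (1 - single) ** replicas


def serial_availability(*components: float) -> float:
    """Availability of a chain in which every component must be up."""
    result = 1.0
    for availability in components:
        result *= availability
    return result


def downtime_hours_per_year(availability: float) -> float:
    """Expected downtime over a 365-day year."""
    return (1 - availability) * 365 * 24


one_region = 0.99  # illustrative availability of a single deployment
two_regions = parallel_availability(one_region, replicas=2)

print(f"One region:  {downtime_hours_per_year(one_region):.2f} hours down per year")
print(f"Two regions: {downtime_hours_per_year(two_regions):.2f} hours down per year")
```

`serial_availability` is useful for the opposite effect: a model endpoint that sits behind a gateway and a vector store is less available than any one of them alone.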

9) Platforms & Ecosystem Offered: Consider the broader ecosystem and platform support surrounding the model.

- Development Tools & SDKs: Availability of Software Development Kits (SDKs) in your preferred programming languages, along with comprehensive documentation and developer tools, can significantly speed up integration.

- Supported Frameworks: Compatibility with popular AI/ML frameworks (e.g., TensorFlow, PyTorch, Hugging Face Transformers) can be beneficial for development and customization.

- Monitoring & Management Tools: Look for tools that facilitate the monitoring of model performance, usage, and overall health in a production environment.

- Community & Support Channels: A strong community or readily available vendor support can be invaluable for troubleshooting and best practices.

10) Fine-tuning and Integration Capabilities: While foundational models are powerful, you often need to adapt them to your specific domain or tasks.

- Ease of Fine-tuning: Assess how easy it is to fine-tune the model with your own data. Consider the data requirements, computational resources needed, and the complexity of the fine-tuning process.

- Integration APIs & SDKs: The model should offer well-documented and robust APIs or SDKs for seamless integration into your existing applications, data pipelines, and workflows.

- Adaptability Techniques: Beyond fine-tuning, explore support for techniques like Retrieval Augmented Generation (RAG) for incorporating external knowledge, or prompt engineering best practices to steer model behavior effectively.
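One way to bring the ten factors together is a simple weighted decision matrix. The sketch below is only an illustration: the factor names are a subset of the list above, and the weights, the 0 to 5 scores, and the candidates `model_a` and `model_b` are made-up assumptions that you would replace with your own assessment.

```python
def weighted_score(scores, weights):
    """Combine per-factor scores (0 to 5) into one weighted average."""
    total_weight = sum(weights.values())
    weighted_sum = sum(scores[factor] * weight for factor, weight in weights.items())
    return weighted_sum / total_weight


# Hypothetical weights: a higher number means the factor matters more to you.
weights = {
    "cost": 3,
    "performance": 4,
    "security": 5,
    "portability": 2,
    "fine_tuning": 2,
}

# Hypothetical scores for two made-up candidate models.
candidates = {
    "model_a": {"cost": 4, "performance": 3, "security": 4, "portability": 5, "fine_tuning": 2},
    "model_b": {"cost": 2, "performance": 5, "security": 4, "portability": 3, "fine_tuning": 4},
}

ranking = sorted(
    candidates,
    key=lambda name: weighted_score(candidates[name], weights),
    reverse=True,
)

for name in ranking:
    print(f"{name}: {weighted_score(candidates[name], weights):.3f}")
```

The final number matters less than the exercise of agreeing on the weights, since that forces the team to state which factors are truly non-negotiable.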

Share your experience of weighing these factors when choosing the right AI model for your enterprise needs.

Happy learning!